Positional Encoding as Spatial Inductive Bias in GANs (CVPR2021)

Motivation

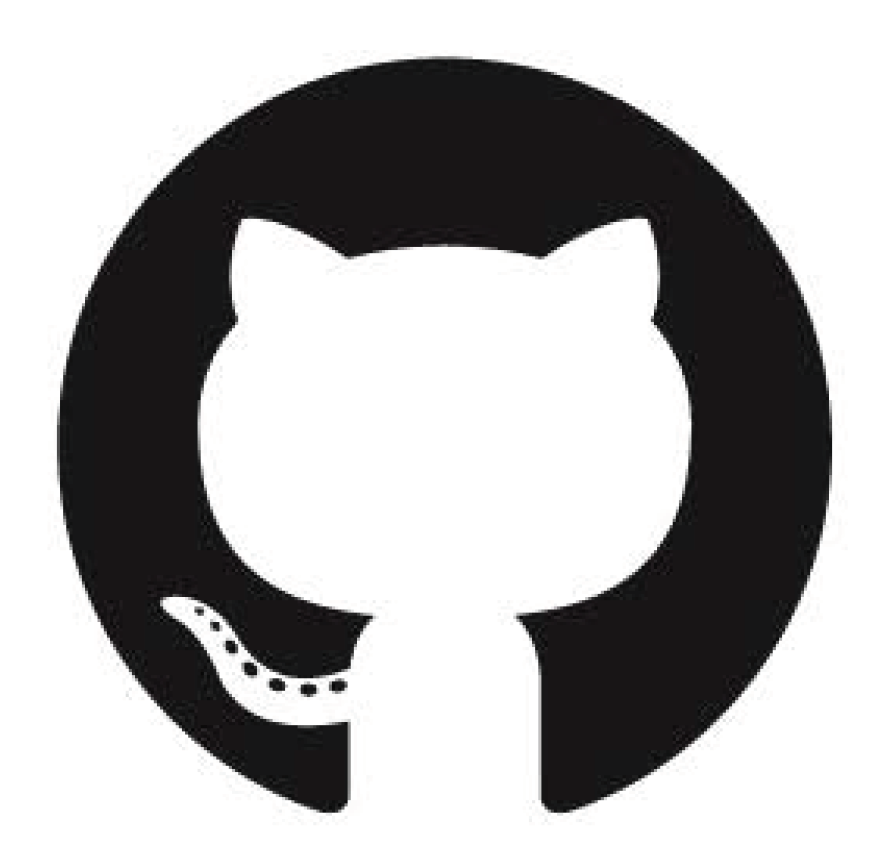

SinGAN shows impressive capability in learning internal patch distribution despite its limited effective receptive field. We are interested in knowing how such a translation-invariant convolutional generator could capture the global structure with just a spatially i.i.d. input. In this work, taking SinGAN and StyleGAN2 as examples, we show that such capability, to a large extent, is brought by the implicit positional encoding when using zero padding in the generators. However, such an implicit positional encoding introduces unbalanced spatial bias over the whole image space. To offer a better spatial inductive bias, we investigate alternative positional encodings and analyze their effects. The impact of positional encoding to the convolutional generator can be obviously observed in the following picture.

Investigation and Analyses

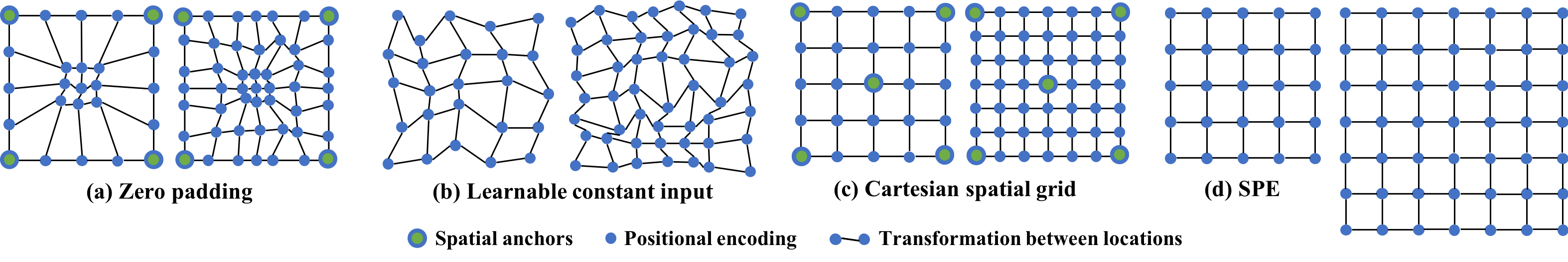

We study the implicit positional encoding brought by zero padding through detailed theoretical and empirical analyses. Meanwhile, explicit positional encodings are also investigated in this work for offering better spatial inductive bias to the translation-invariant convolutional generator. An intuitive illustration for the image space portrayed by different positional encodings is presented in this figure.

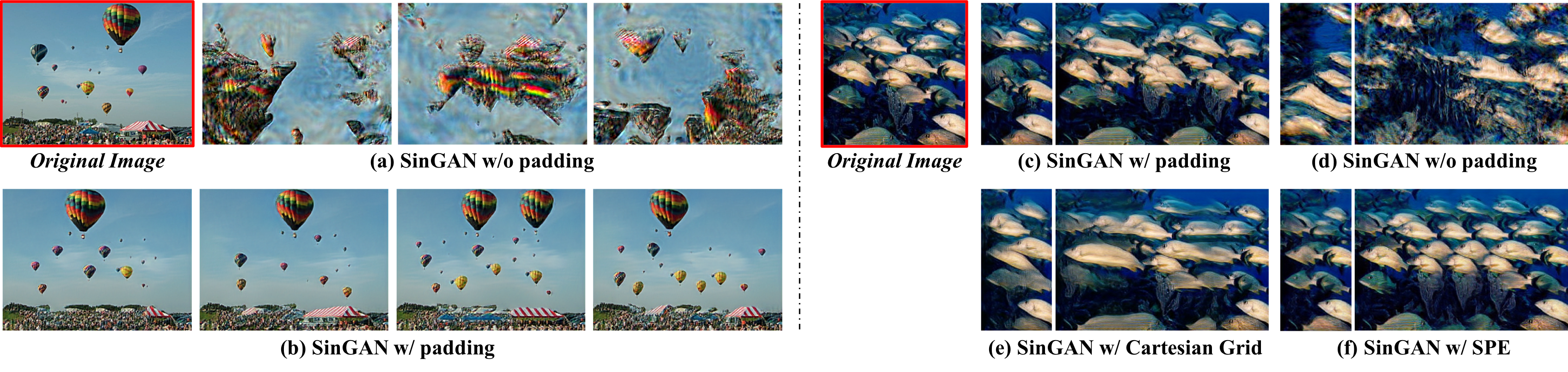

Application in StyleGAN2 and SinGAN

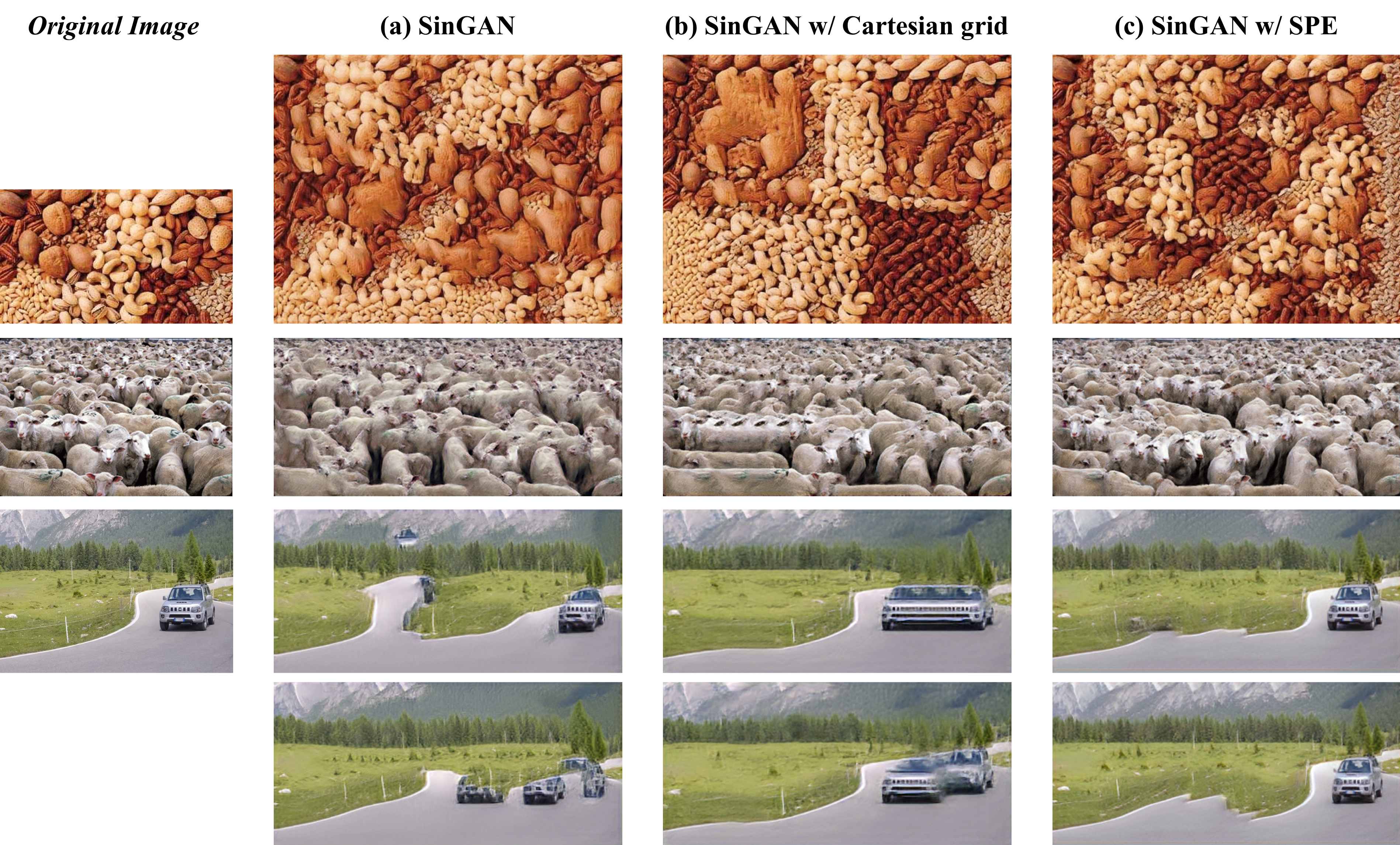

Based on a more flexible positional encoding explicitly, we propose a new multi-scale training strategy (MS-PIE) and demonstrate its effectiveness in the state-of-the-art unconditional generator StyleGAN2. As shown in this figure, with our MS-PIE, a single 256x256 StyleGAN2-C1 model can achieve compelling multi-scale generation quality at 1024x1024, 512x512, and 256x256 pixels (from left to right). Besides, we customize an image manipulation algorithm for the model trained in MS-PIE, where we can achieve high-reolution manipulation with a single 256x256 backbone.

Besides, the explicit spatial inductive bias substantially improve SinGAN for more versatile image manipulation and the robustness to the challenging cases. As shown in the last case, SPE can keep a reasonable spatial relationship between patches, where the width of the car is modified within a reasonable value. On the contrary, the Cartesian gird tends to stretch the object with the global structure retained by explicit spatial anchors.

Citation

@InProceedings{Xu_2020_Pos,

author = {Xu, Rui and Wang, Xintao and Chen, Kai and Zhou, Bolei and Loy, Chen Change},

title = {Positional Encoding as Spatial Inductive Bias in GANs},

booktitle = {arxiv},

month = {December},

year = {2020}

}

Contact

If you have any question, please contact Rui Xu at xr018@ie.cuhk.edu.hk.