
He is currently a fourth-year PhD candidate at the Multimedia Laboratory, The Chinese University of Hong Kong. His supervisor is Prof. Xiaoou Tang, and he works closely with Prof. Chen Change Loy and Prof. Bolei Zhou. He received his B.Eng. degree from the Department of Electronic Engineering, Tsinghua University.


He is interested in deep learning and its applications in computer vision. He is now working on image/video inpainting and image synthesis. He has previous research experience in image/video segmentation, detection and instance segmentation.


Rui Xu, Xiangyu Xu, Kai Chen, Bolei Zhou, Chen Change Loy. Technical Report. [PDF], [Project Page]. In this paper, we conduct a comprehensive empirical study of the intrinsic properties of Transformers in GANs for high-fidelity image synthesis. Our analysis highlights the importance of feature locality in image generation. We also examine the influence of residual connections in self-attention layers and propose a novel way to reduce their negative impact on learning discriminators and conditional generators.


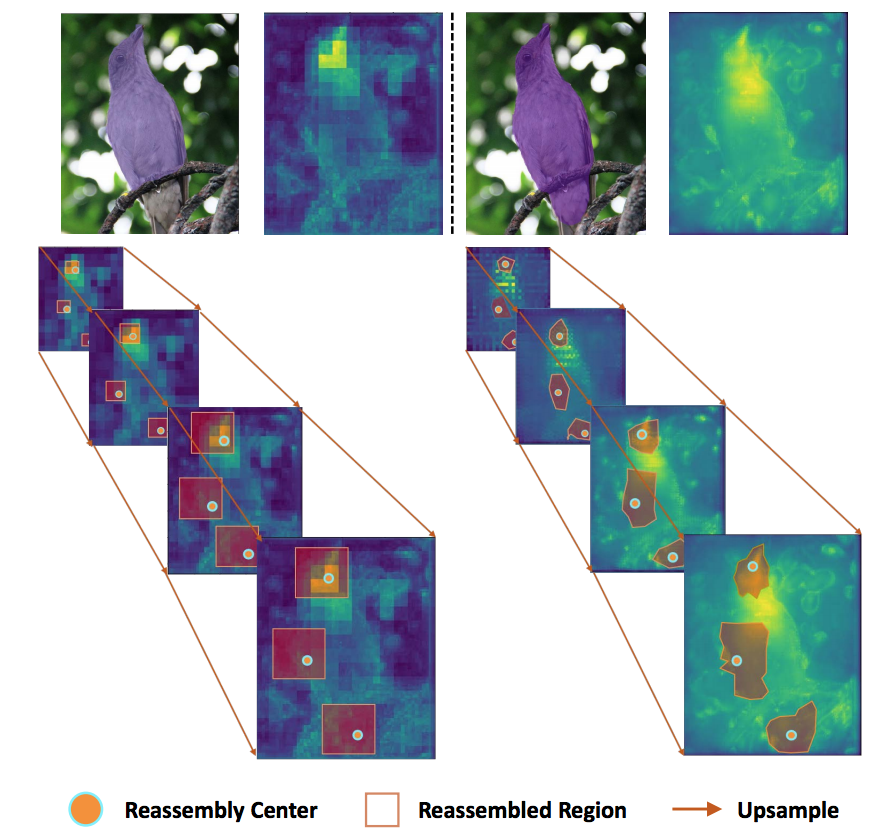

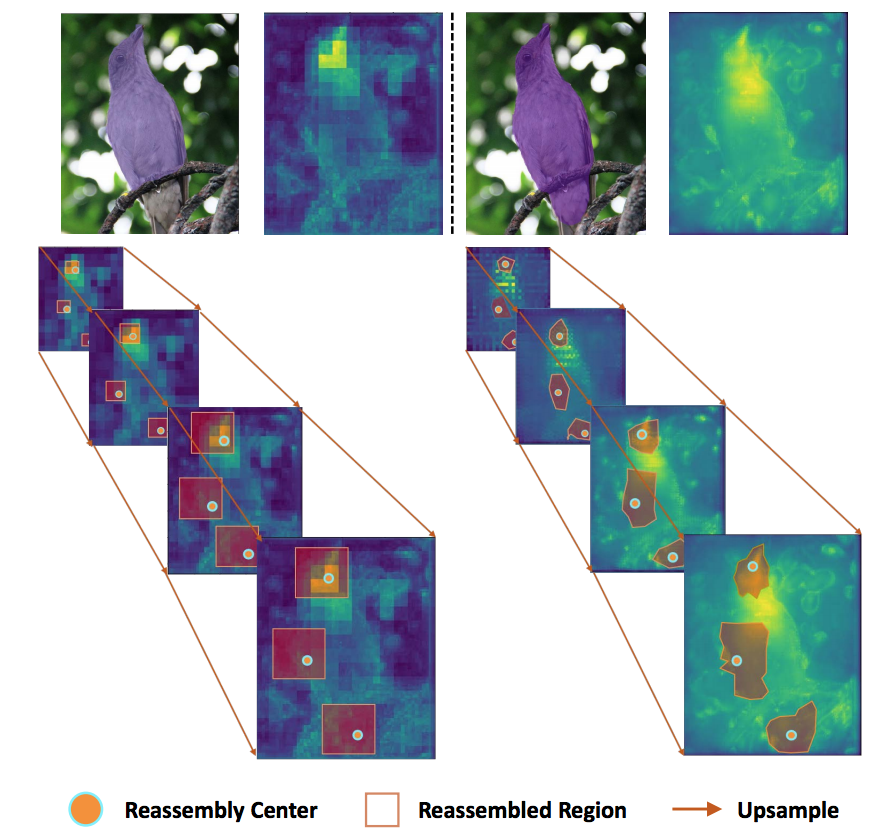

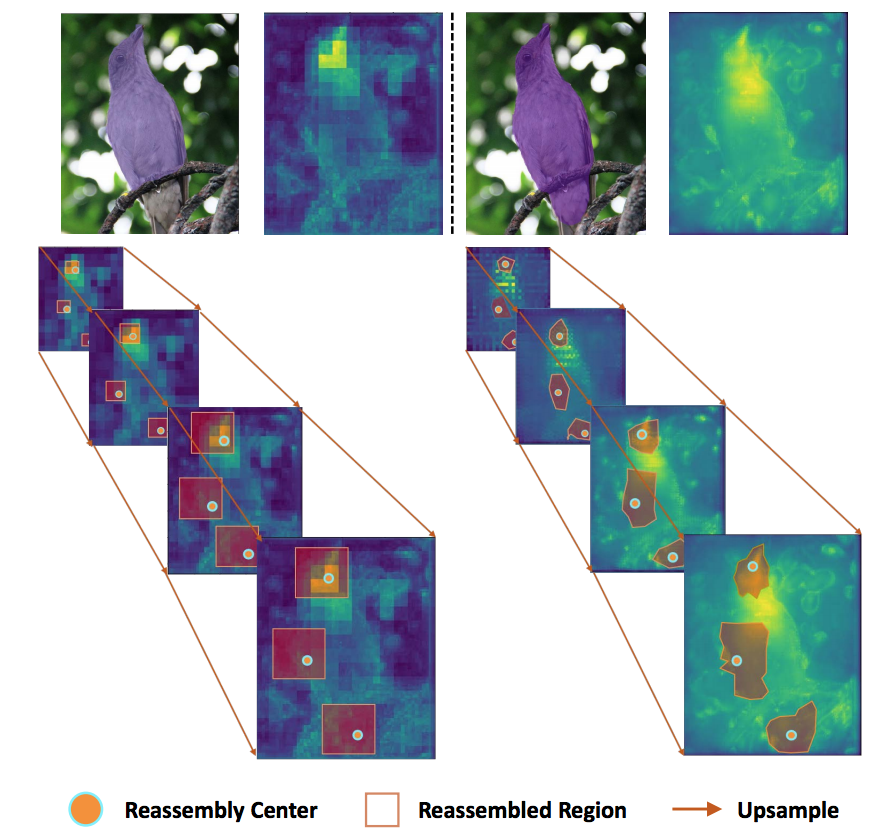

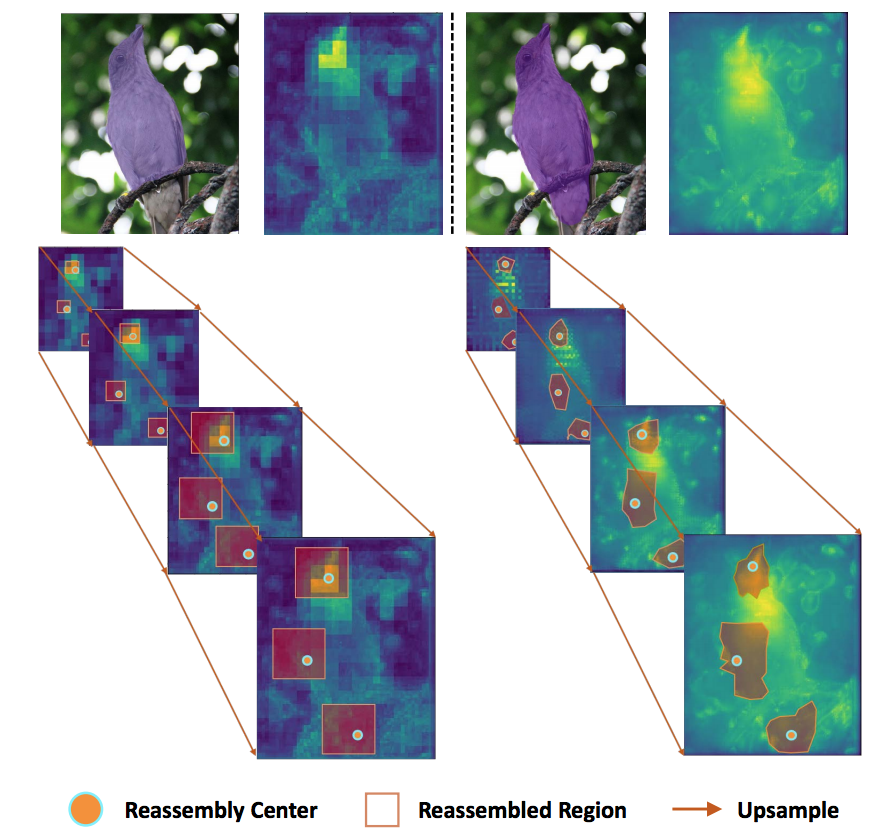

Jiaqi Wang, Kai Chen, Rui Xu, Ziwei Liu, Chen Change Loy, Dahua Lin. T-PAMI. [PDF] [Codes]. In this work we improve CARAFE and offer a new universal, lightweight and highly effective feature reassembly operator, termed CARAFE++. It consistently boosts performance on standard benchmarks in object detection, instance/semantic segmentation and inpainting.


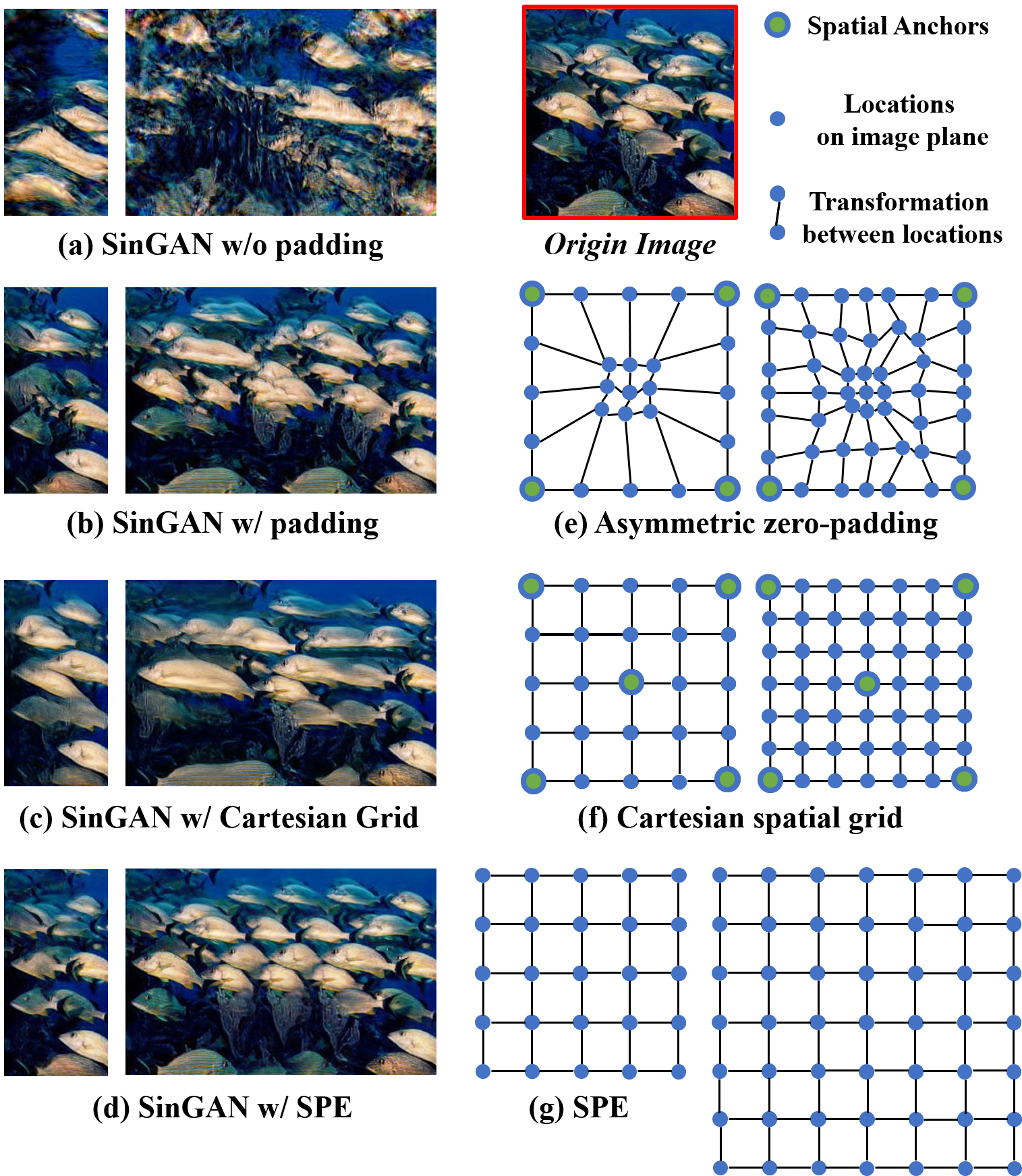

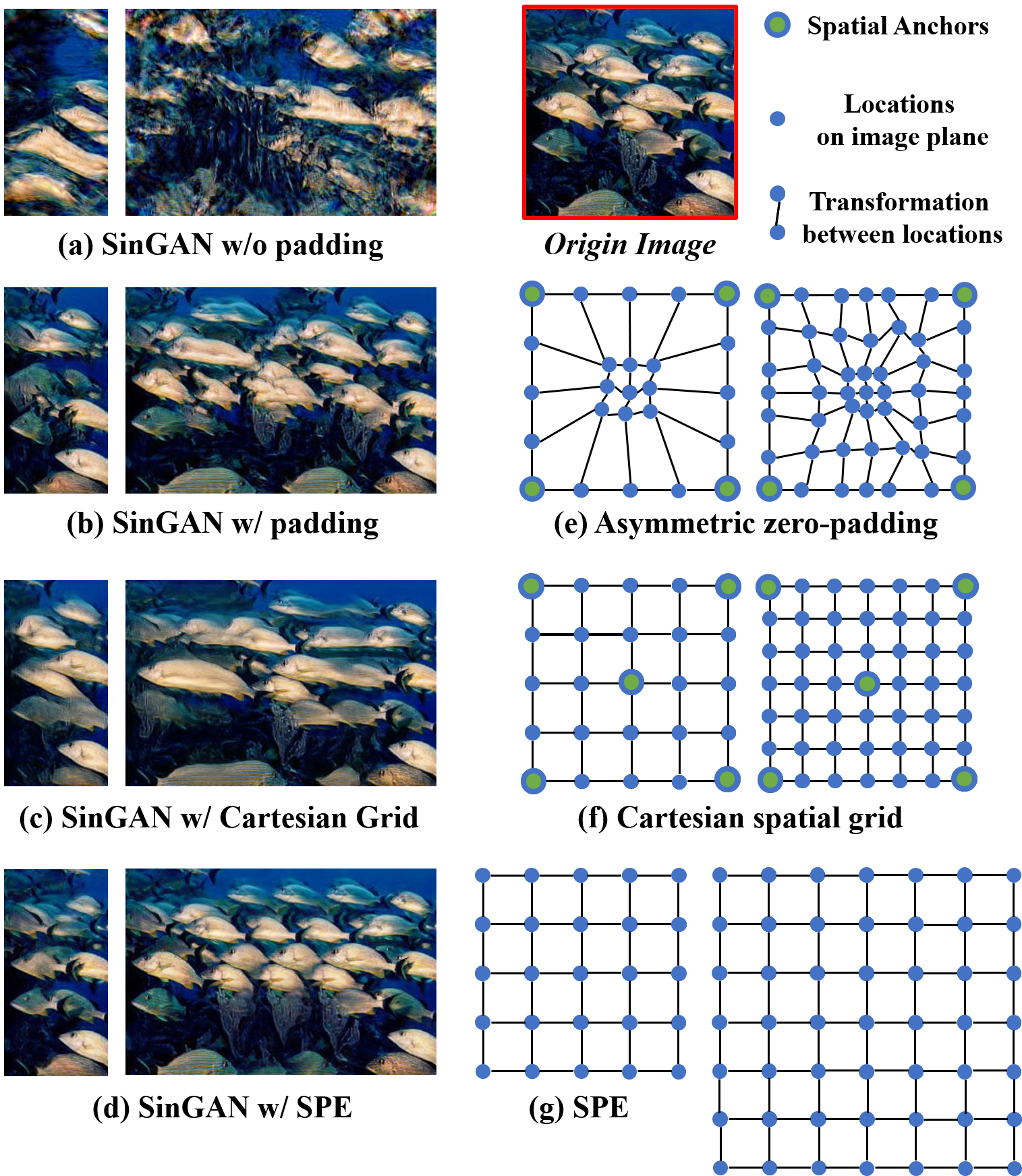

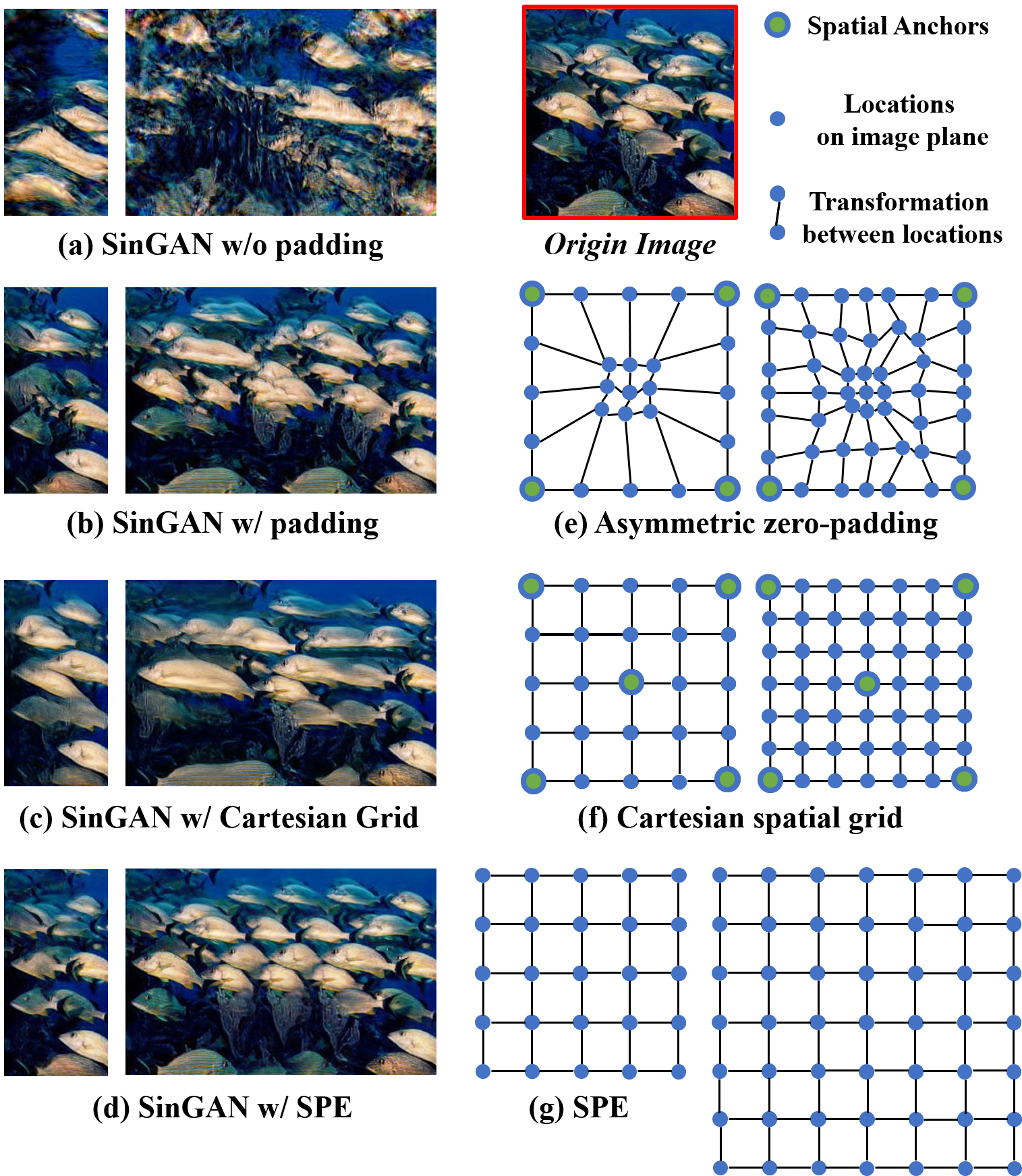

Rui Xu, Xintao Wang, Kai Chen, Bolei Zhou, Chen Change Loy. CVPR, 2021. [PDF], [Project Page], [Code]. We investigate the phenomenon of implicit positional encoding serving as a spatial inductive bias in current GANs. Based on explicit positional encoding, we provide a novel training strategy, named MS-PIE, to train a single multi-scale GAN model.


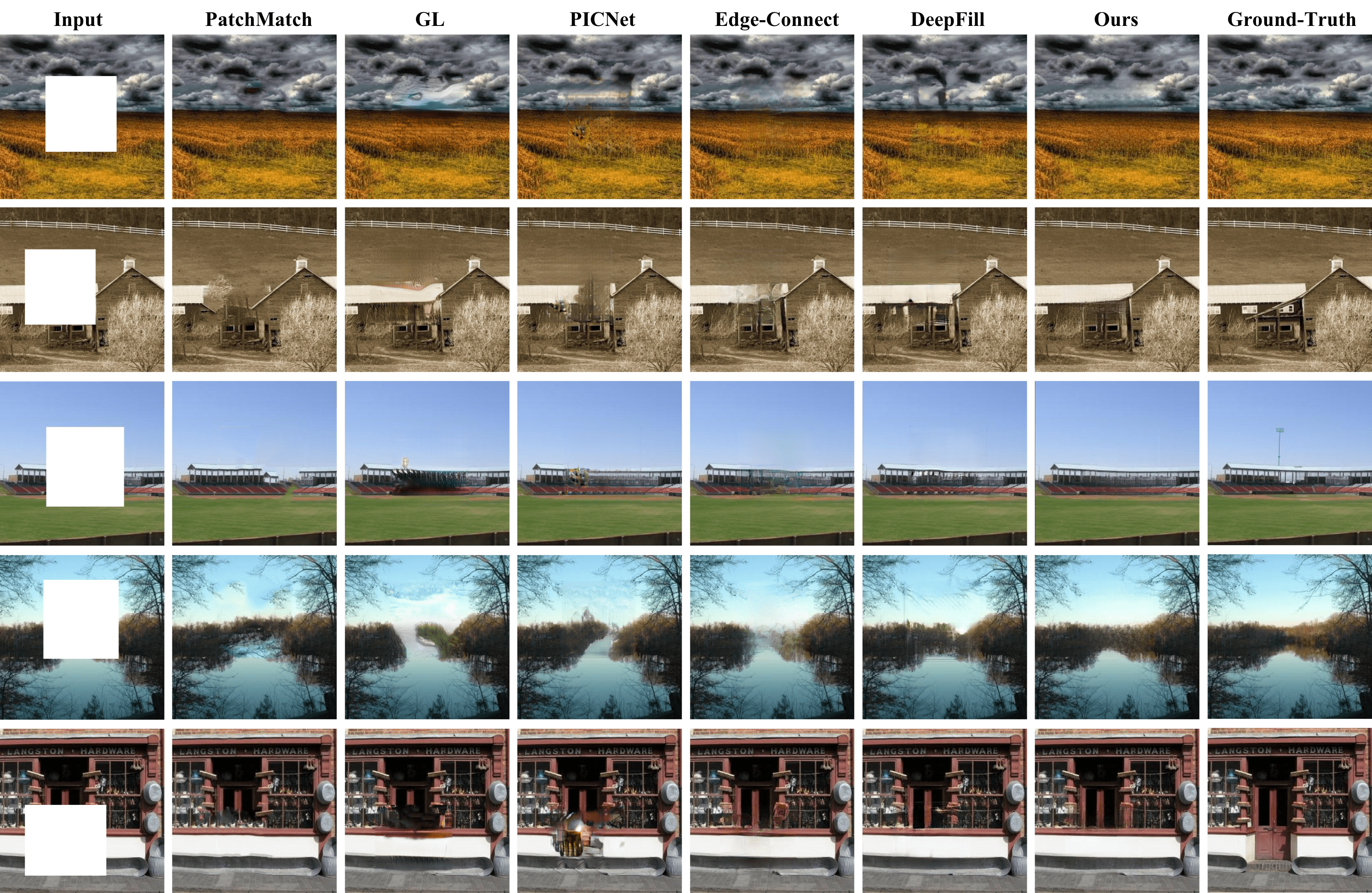

Rui Xu, Minghao Guo, Jiaqi Wang, Xiaoxiao Li, Bolei Zhou, Chen Change Loy. Submitted to TIP. [PDF], [Project Page]. We propose a new deep inpainting framework where texture generation is guided by a texture memory of patch samples extracted from unmasked regions.


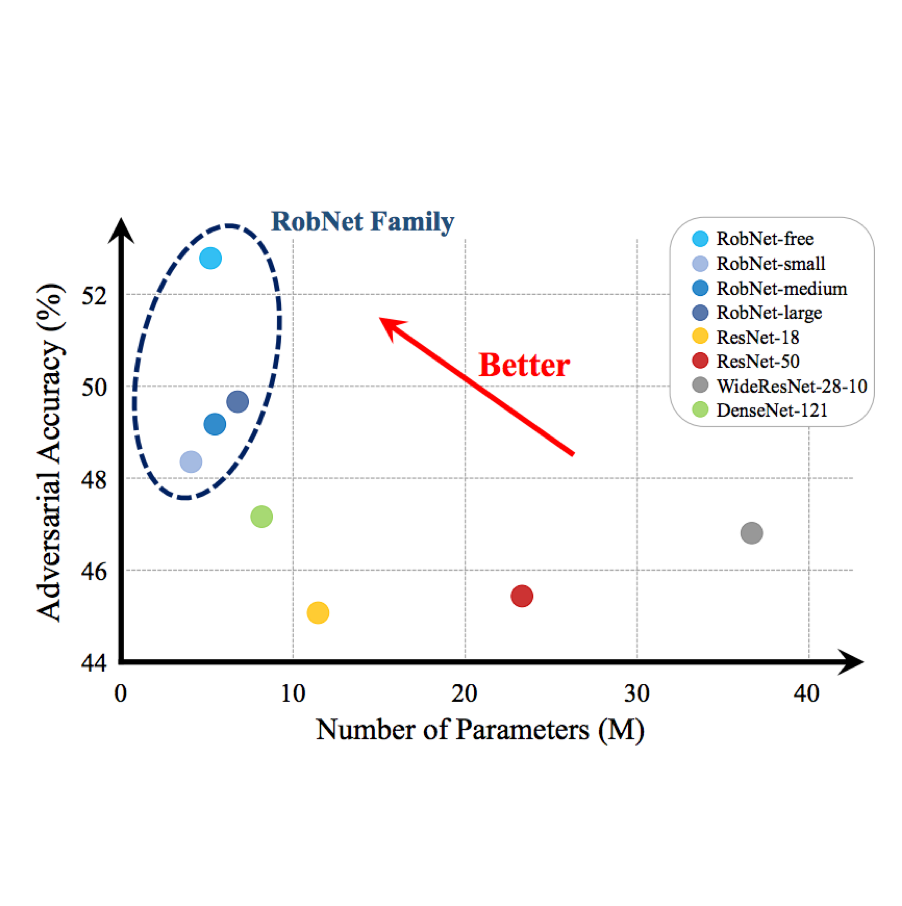

Minghao Guo, Yuzhe Yang, Rui Xu, Ziwei Liu. CVPR, 2020. [PDF]


Jiaqi Wang, Kai Chen, Rui Xu, Ziwei Liu, Chen Change Loy, Dahua Lin. ICCV, 2019 (Oral). [PDF] [Codes]. We present Content-Aware ReAssembly of FEatures (CARAFE), a universal, lightweight and highly effective upsampling operator. It consistently boosts performance on standard benchmarks in object detection, instance/semantic segmentation and inpainting.


Rui Xu, Xiaoxiao Li, Bolei Zhou, Chen Change Loy. CVPR, 2019. [PDF] [Project Page] [Codes]. We propose Deep Flow-Guided Video Inpainting, a simple yet effective approach to solve the problem of video inpainting. You can watch our video demo at this link.


Thanks to Jon Barron for sharing the website code.